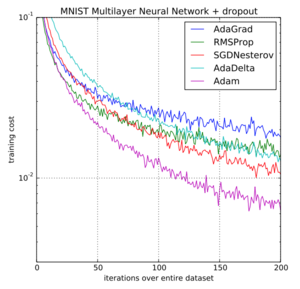

A Visual Explanation of Gradient Descent Methods (Momentum, AdaGrad, RMSProp, Adam) | by Lili Jiang | Towards Data Science

Gentle Introduction to the Adam Optimization Algorithm for Deep Learning - MachineLearningMastery.com

From SGD to Adam. Gradient Descent is the most famous… | by Gaurav Singh | Blueqat (blueqat Inc. / former MDR Inc.) | Medium

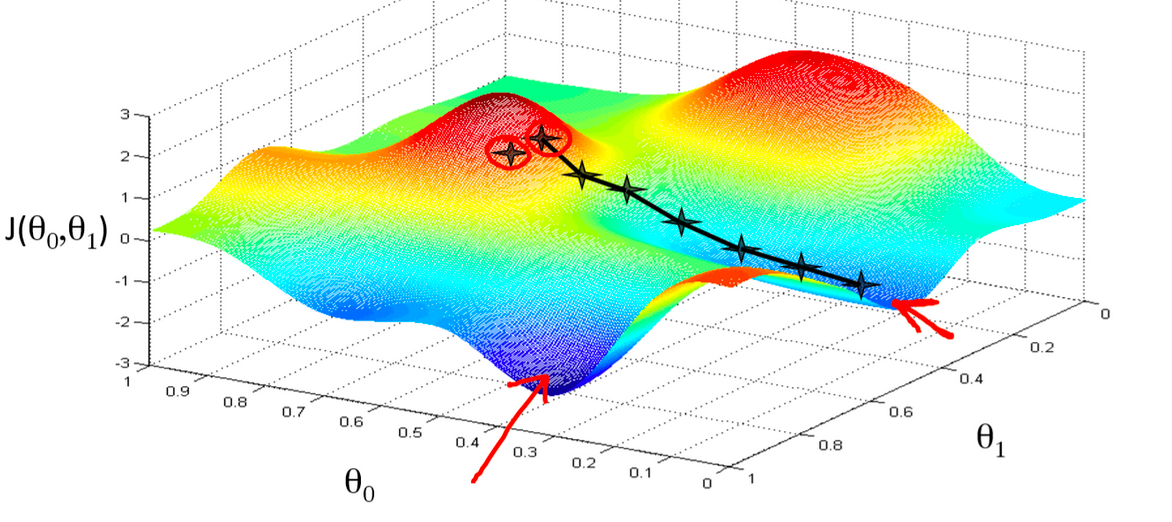

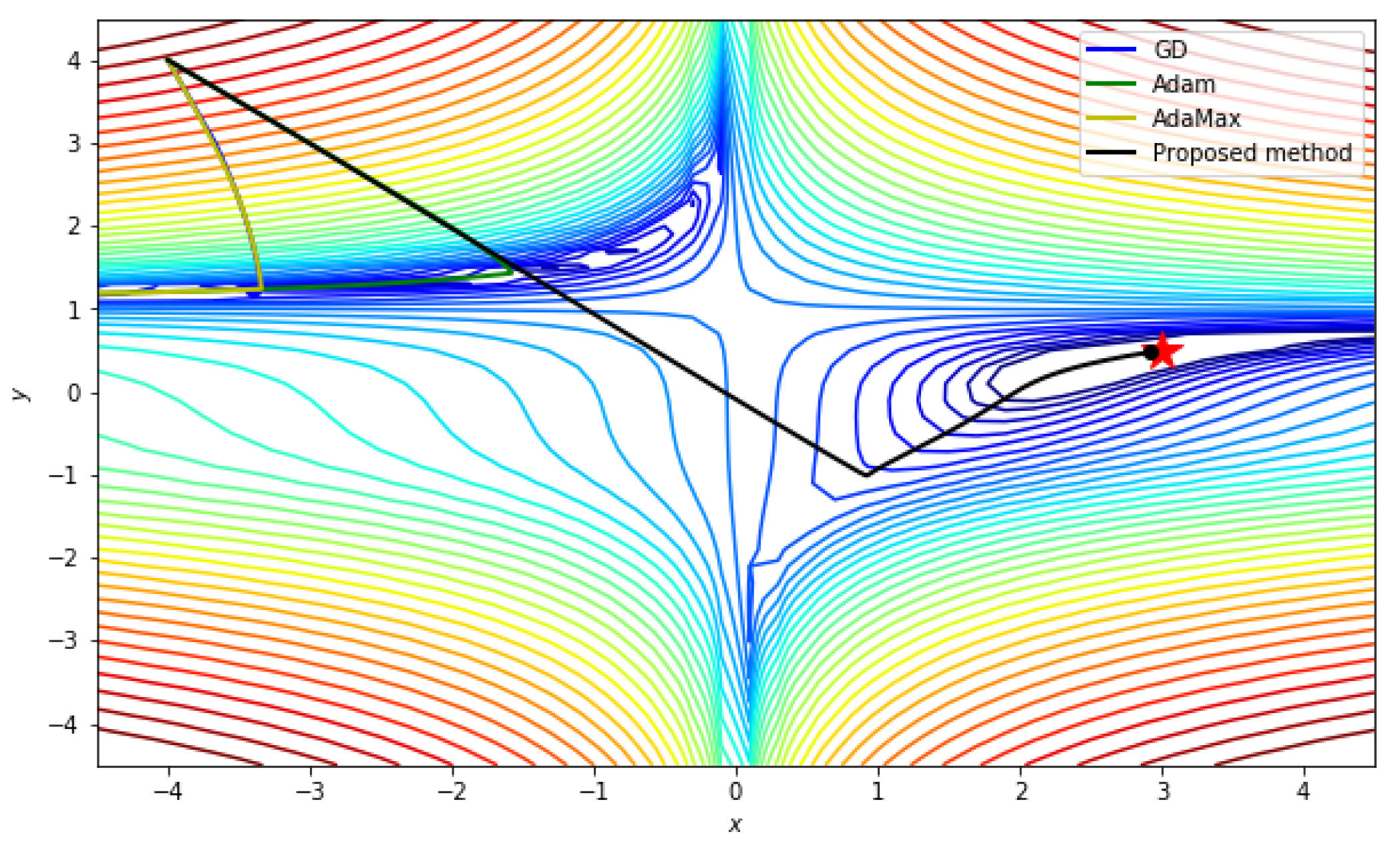

Applied Sciences | Free Full-Text | An Effective Optimization Method for Machine Learning Based on ADAM

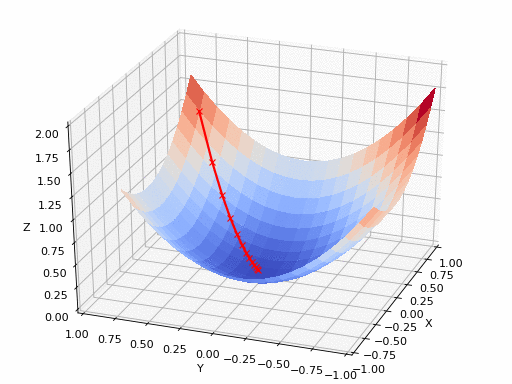

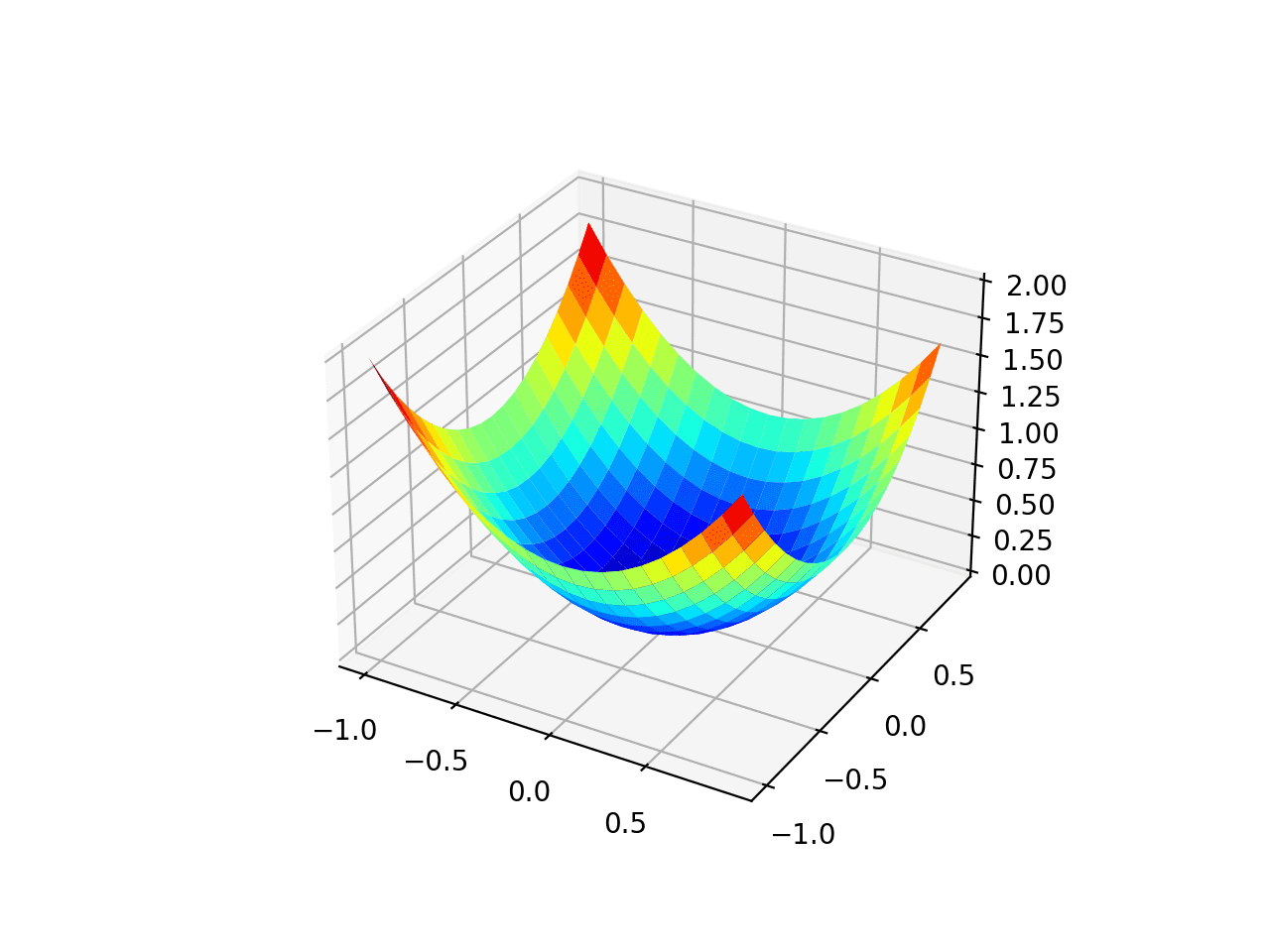

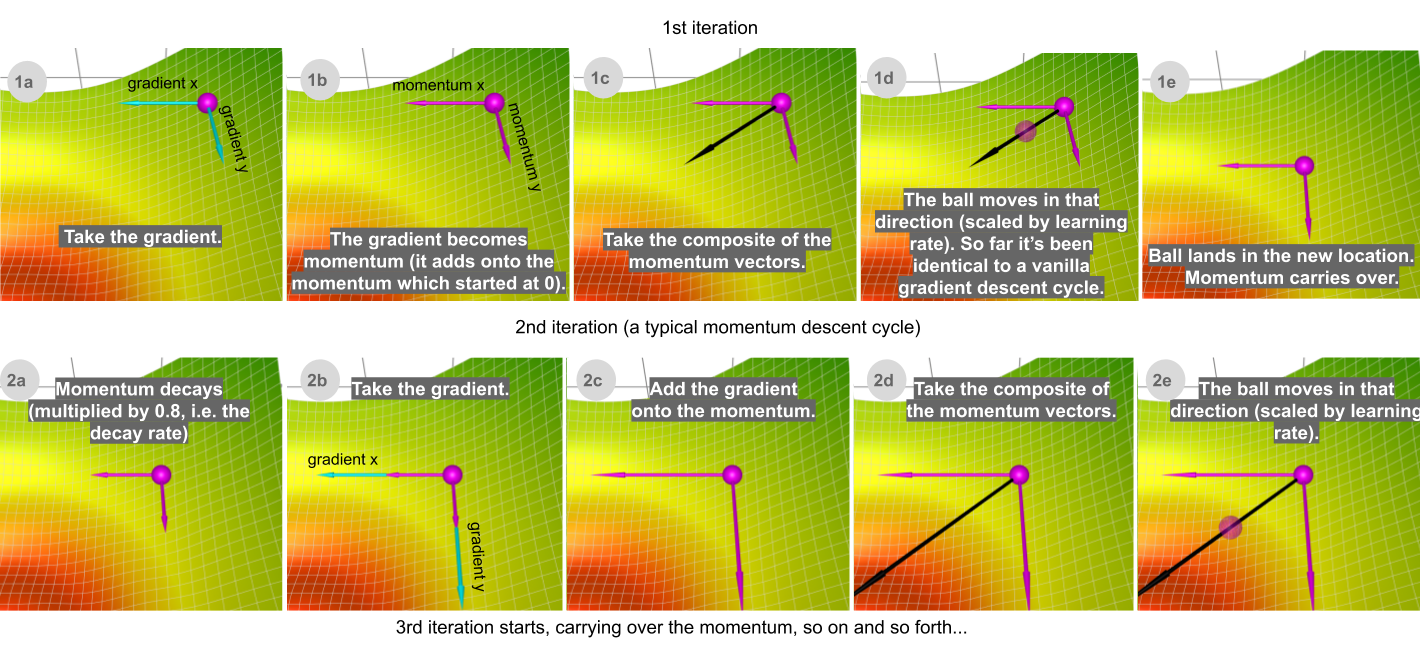
